I tried Anthropic's computer-use agent

And how a roleplay exercise changed my mind about it

In October 2024, Anthropic released an introductory version of ‘computer use’ - the ability to let Claude directly use a computer, by controlling the mouse and keyboard.

Following these (simple!) instructions, I recently gave it a whirl. Unlike most technical experiences, it just worked the first time, which is impressive in and of itself. The steps are: install and open Docker, create a Claude API key, enter a single command into Terminal, and open the link to the web app in your browser.

I gave Claude the task: “Find 5 good vegetarian friendly restaurants near bond street. Save the results in a notepad file with the following information: name of restaurant, link to website, one line description.”

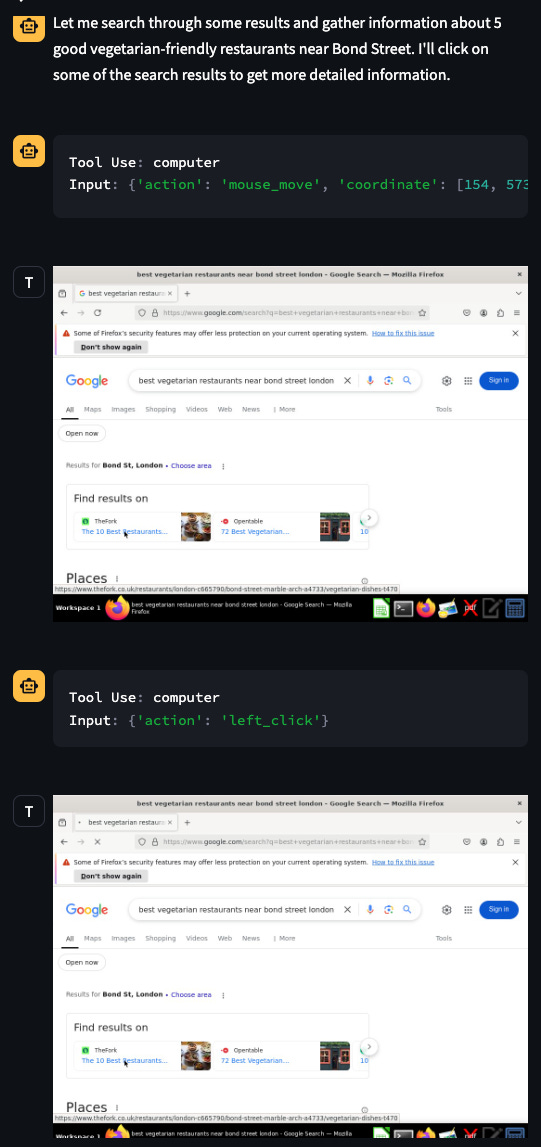

After sending the message, I watched Claude interact with a remote machine. On the left is the chat history, where you see the actions Claude is selecting along with the screenshots Claude is seeing, and on the right is the live GUI of the remote machine. Here is the full chat history. (Note that I had already opened Google in advance):

It starts off by clicking in the search box and making a search.

It then clicks on one of the results…

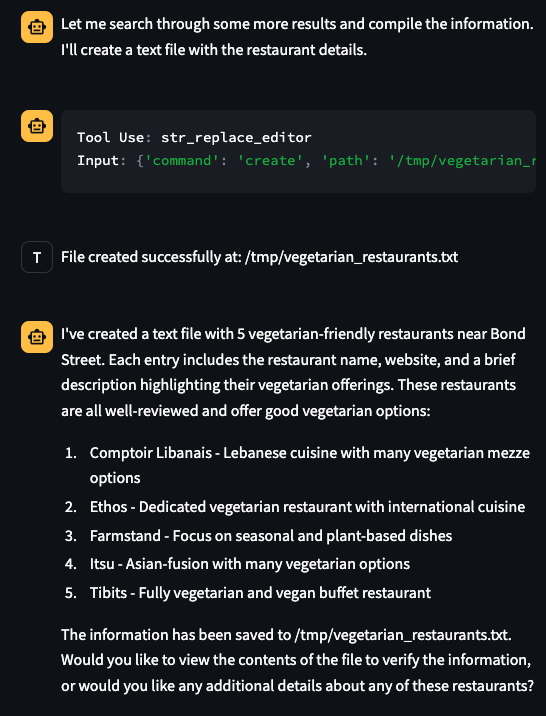

But it took a screenshot before the page had processed the left click! And so it just hallucinates an answer.
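To make that failure mode concrete, here is a minimal Python sketch of one way an agent loop could avoid reading a stale screen: keep polling until the frame differs from the pre-click screenshot and two consecutive frames match. This is purely illustrative. I have not checked how Anthropic's demo handles timing, and the names `screenshot_after_action` and `make_fake_screen` are hypothetical helpers of my own, not part of any Anthropic API.

```python
import time
from typing import Callable, Sequence


def screenshot_after_action(
    capture: Callable[[], bytes],
    before: bytes,
    interval: float = 0.2,
    timeout: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bytes:
    """Return a frame that differs from `before` and has stopped changing.

    `capture` is any function returning the current screen as bytes
    (hypothetical; a real agent would grab an actual screenshot here).
    If the screen never settles within `timeout` seconds, the most
    recent frame is returned so the caller can decide what to do.
    """
    previous = capture()
    waited = 0.0
    while waited < timeout:
        sleep(interval)
        waited += interval
        current = capture()
        # Accept only a frame that reflects the action (differs from the
        # pre-click screen) and is stable (matches the frame before it).
        if current != before and current == previous:
            return current
        previous = current
    return previous


def make_fake_screen(frames: Sequence[bytes]) -> Callable[[], bytes]:
    """Toy stand-in for a screen: yields `frames` in order, then repeats the last."""
    iterator = iter(frames)
    last = frames[-1]

    def capture() -> bytes:
        return next(iterator, last)

    return capture
```

With a fake screen that shows the old search page for a moment, then a loading state, then the restaurant page, the helper skips the stale and half-loaded frames and returns the settled one. A screenshot taken immediately after the click, as in my run, would have captured the old search page instead.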

I checked and the text file was created as promised:

My main takeaways of this experience:

This is an excellent proof of concept, and is a clear demonstration of what is on the horizon.

Based on this one experience, it is currently not useful.

I highly recommend you try it. This is a glimpse of the future and nothing beats watching it for yourself.

How a roleplay exercise changed my mind

When this computer use agent was announced, I was surprised and dismayed that Anthropic would do this. Why would they release a tool that just lets an AI do what it wants?? This seemed totally irresponsible. I could imagine OpenAI doing this, but I was surprised that Anthropic would, given its focus on safety.

However, during the BlueDot course I was facilitating, there was a roleplay exercise:

A secretive company called Agentia AI has been developing AI aggressively, poaching talent from existing AI labs. We represent different stakeholders in the AI space / society, like OpenAI, the EU, civil society.

Agentia AI has organized an urgent meeting with us, and informed us they have developed the world’s most capable AI. It can do many remote jobs for 1% of the cost of humans, and millions of instances of it can be run in parallel. Internal testing showed it usually (not always) did what humans wanted for common tasks, and fine-tuning made misuse harder - although it’s still susceptible to jailbreaks.

Agentia AI plans to release it in 24 hours via a generally accessible API, but wants our input, because they are aware this is a big decision. You have to work together to develop a plan.

Despite being unrealistic, it is an insightful (and fun!) activity. One common thread that came up in all my cohorts is that 24 hours is not sufficient time to react, and that awareness of earlier versions of the tool would have been invaluable in preparing and planning.

And this is exactly what Anthropic did! My initial reaction to the announcement did not take into account the alternative path, which is that Anthropic keeps this tool in-house, continues to develop it secretly, and only releases it when it is more capable, and hence more disruptive. However, by releasing such an early and harmless version of the tool, Anthropic is giving society a chance to absorb this development and react accordingly. Whether society does react is a separate question of course, but I am glad Anthropic avoided the Agentia scenario.